AI arms race to summon a God risks ending up like GPS: accessible only in a watered-down version for non-military applications

If the forthcoming Superintelligence can give a decisive military advantage, how likely is it that they will let you use it for anything other than making memes, sending cold emails and some basic programming?

First, I'm not asserting that this will happen. Rather, I'm highlighting that current sentiment, as gauged by market pricing, underestimates the risk of it happening. See our scenario analysis at the end. Now, let's dive in.

Have you heard of Leopold Aschenbrenner? If not yet, chances are that you soon will.

Aschenbrenner is a former member of OpenAI's Superalignment team, which was tasked with finding ways to steer and control AI systems much smarter than us.

Aschenbrenner has become something of a whistleblower on the innate dangers emerging from the race to achieve Artificial General Intelligence (AGI), while also offering unparalleled insight into AI development and the likely path ahead.

In his paper SITUATIONAL AWARENESS: The Decade Ahead, Aschenbrenner states that the AI industry's investment focus in the pursuit of AGI has shifted "from $10 billion compute clusters to $100 billion clusters to trillion-dollar clusters".

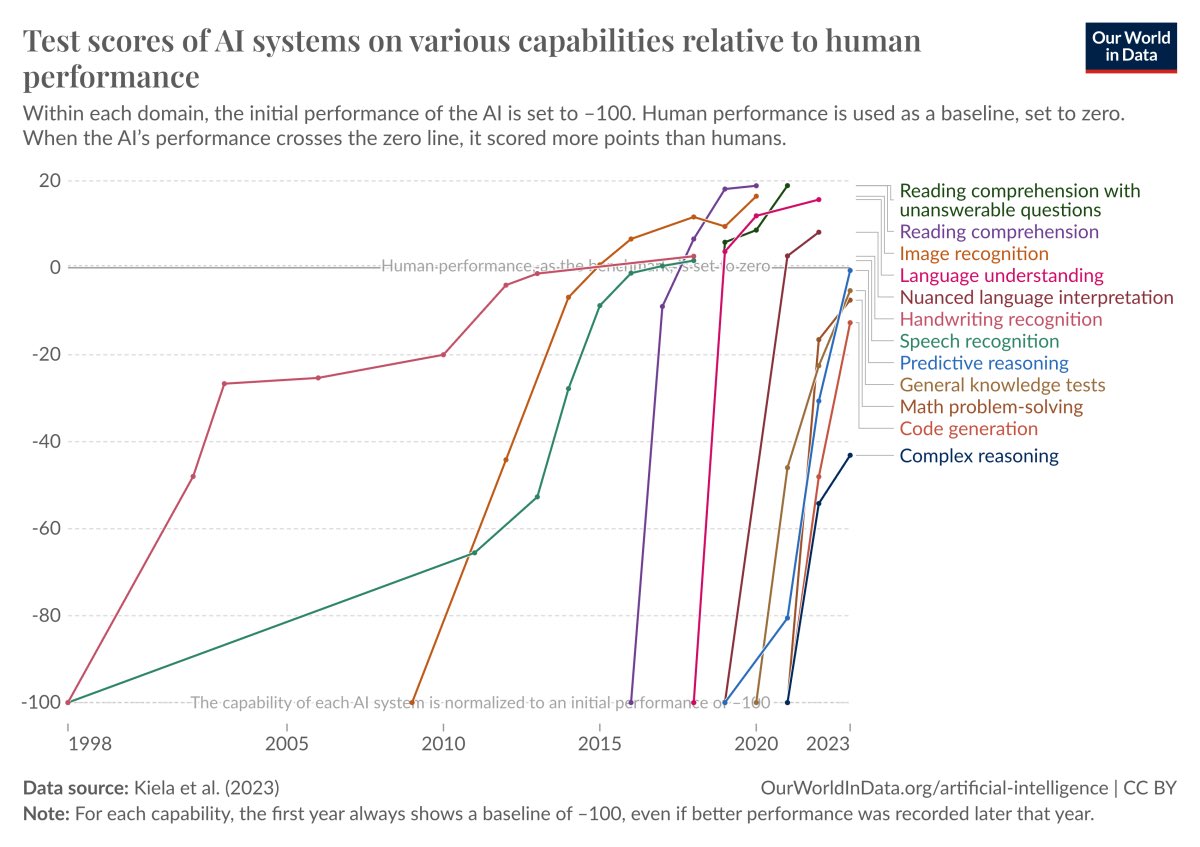

“The pace of deep learning progress in the last decade has simply been extraordinary. A mere decade ago it was revolutionary for a deep learning system to identify simple images. Today, we keep trying to come up with novel, ever harder tests, and yet each new benchmark is quickly cracked. It used to take decades to crack widely-used benchmarks; now it feels like mere months.”

“Behind the scenes, there’s a fierce scramble to secure every power contract still available for the rest of the decade, every voltage transformer that can possibly be procured. American big business is gearing up to pour trillions of dollars into a long-unseen mobilization of American industrial might.”

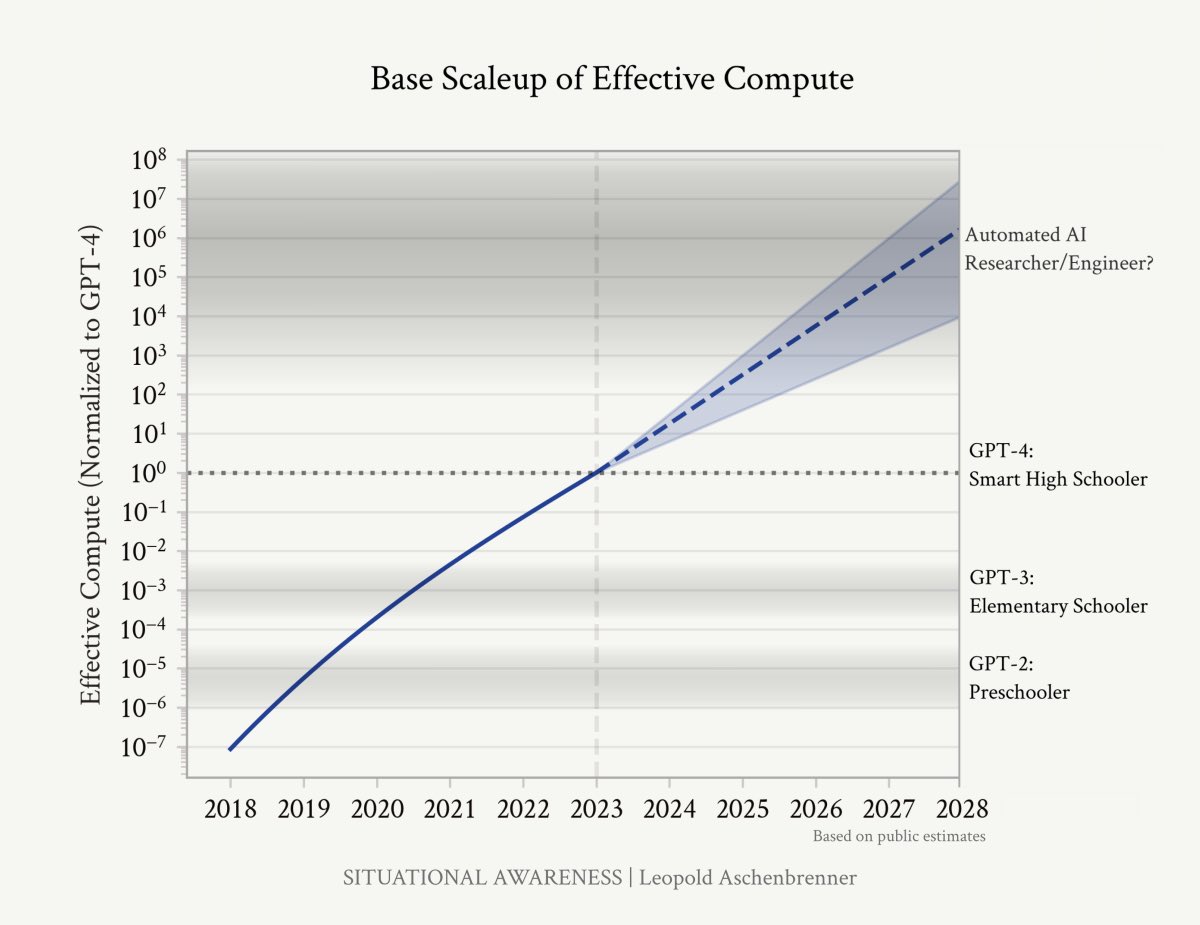

“We are building machines that can think and reason. By 2025/26, these machines will outpace many college graduates. By the end of the decade, they will be smarter than you or I; we will have superintelligence, in the true sense of the word.”

Trying to summon a God

While it may still seem a bit far away, Aschenbrenner's paper convincingly shows that we are only at the beginning of this "techno-capital acceleration", and why it is likely, or even inevitable, that AI will soon surpass us.

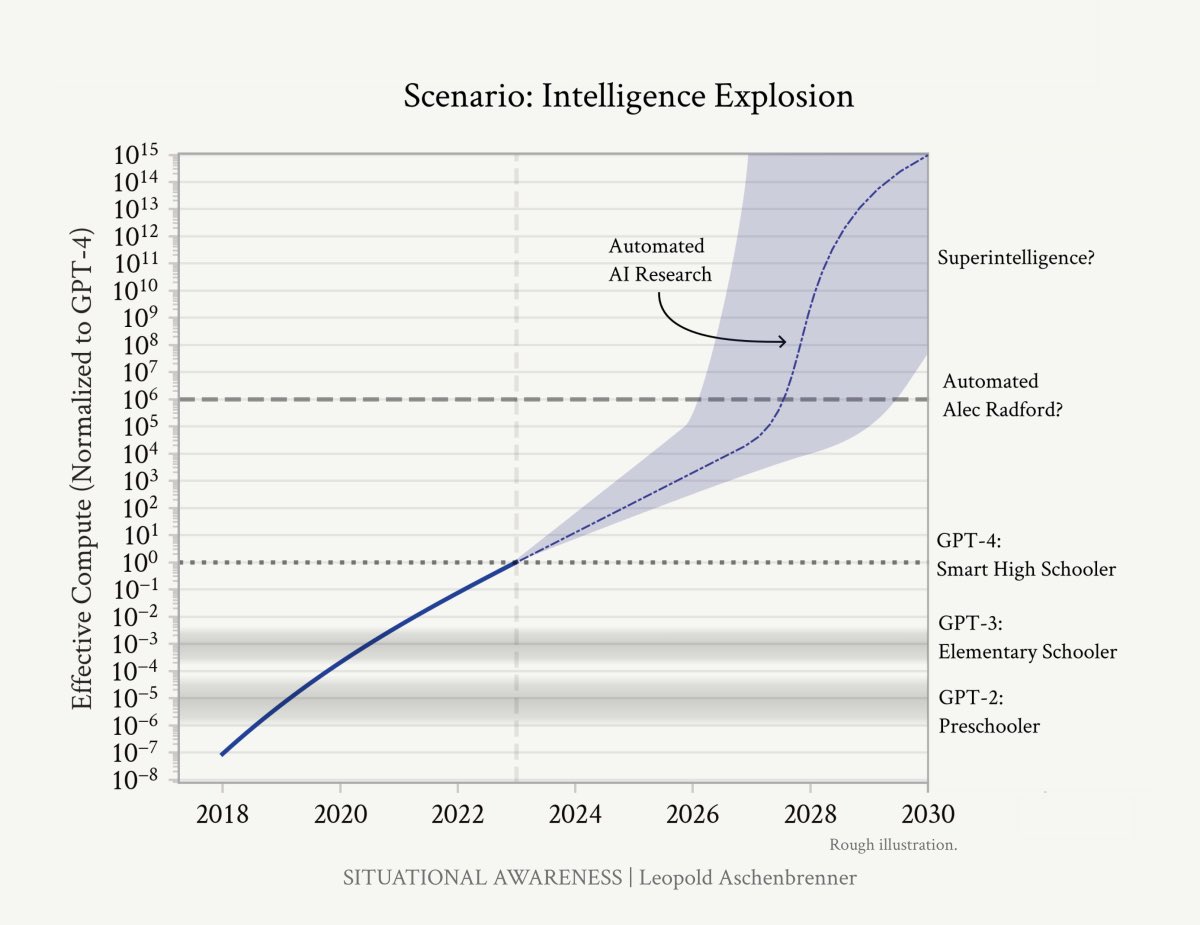

“AI progress won’t stop at human-level. Hundreds of millions of AGIs could automate AI research, compressing a decade of algorithmic progress (5+ OOMs) into ≤1 year. We would rapidly go from human-level to vastly superhuman AI systems.”
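For readers unfamiliar with the shorthand, an OOM is an order of magnitude, i.e. a factor of 10. Taking the quote's own numbers at face value, the arithmetic works out roughly as below. This is a back-of-the-envelope reading, not Aschenbrenner's own derivation.

```latex
\begin{aligned}
5\ \text{OOMs} &= 10^{5} = 100{,}000\times \\
\text{pace of progress} &\ge \frac{5\ \text{OOMs} \,/\, 1\ \text{year}}{5\ \text{OOMs} \,/\, 10\ \text{years}} = 10\times \text{ the pace of the past decade}
\end{aligned}
```

In other words, the claim is at least a 100,000-fold gain, arriving at least ten times faster than it otherwise would.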

“I make the following claim: it is strikingly plausible that by 2027, models will be able to do the work of an AI researcher/engineer. That doesn’t require believing in sci-fi; it just requires believing in straight lines on a graph.”

“We don’t need to automate everything—just AI research.”

“Once we get AGI, we’ll turn the crank one more time—or two or three more times—and AI systems will become superhuman—vastly superhuman. They will become qualitatively smarter than you or I, much smarter, perhaps similar to how you or I are qualitatively smarter than an elementary schooler.”

But this is where it gets tricky, and where it may be a bit naive to expect linear progress for the AI industry as a whole.

“Along the way, national security forces not seen in half a century will be unleashed, and before long, The Project will be on. If we’re lucky, we’ll be in an all-out race with the CCP; if we’re unlucky, an all-out war.”

“Superintelligence will give a decisive economic and military advantage. China isn’t at all out of the game yet.”

“Reliably controlling AI systems much smarter than we are is an unsolved technical problem. And while it is a solvable problem, things could easily go off the rails during a rapid intelligence explosion. Managing this will be extremely tense; failure could easily be catastrophic.”

Regulatory movement is brewing

AI has already caught the eye of regulators, and for good reason. If Aschenbrenner is anywhere close to right about the next two to five years, it is very unlikely that the government will

a/ manage to keep its hands off, or

b/ accept the risk of losing the AI arms race and letting China reach superintelligence first.

Here's what Aschenbrenner thinks about that: “As the race to AGI intensifies, the national security state will get involved. The USG will wake from its slumber, and by 27/28 we’ll get some form of government AGI project. No startup can handle superintelligence. Somewhere in a SCIF [Sensitive Compartmented Information Facility], the endgame will be on.”

Have we seen something like this before?

Let's take a step back and think about how other technological breakthroughs have found their way into society at large.

Take GPS, for example. Today it's found in every phone and car, and in millions of other industrial and consumer applications. It's a vital component in much of what drives the modern economy.

But its capacity is severely capped.

GPS has the technological capacity to be 5-10x as accurate as the version that any of us, our cars or our phones use today, if only the military didn't want to keep that capability for itself.

GPS systems available to the public and industry typically have precision within a few meters.

Military-grade GPS, and what is technically possible more broadly, offers significantly higher accuracy, often down to the centimeter, and is crucial for military operations, intelligence gathering and precision targeting. These systems use encrypted signals and advanced technologies to improve security and reliability, in contrast to the more widely accessible, but less secure, civilian GPS.

Just imagine where we would be with self-driving cars if the auto and EV industry had access to centimeter-level GPS accuracy.

So if superintelligence can give a decisive military advantage, how likely is it that they will let you use it for anything other than making memes, sending cold emails and basic programming?

How will it play out?

We see three partially overlapping scenarios.

Scenario A: Regulatory involvement waters down commercial capacity

Implications for society: Development of AGI and superhuman AI capability moves more firmly into the hands of regulators, just as high-precision satellite positioning did. Public and commercial actors are left to commercialize watered-down versions.

Implications for business: The AI CAPEX cycle dwarfs other decade-long investment cycles such as the Apollo program. But the prospects of actually monetizing that investment remain unclear. And if the US Government moves in to seize the best part of the upside in terms of capability, how likely is it that large-scale commercial investment will continue at the projected pace?

Probability: We see a 25% risk of some form of national involvement, oversight or coordination along the lines of capping public access to frontier AI technology. This in turn implies a 20% probability of the big tech names scaling back their most aggressive investments. That would not be good for Big Tech, and certainly not for Nvidia.

Scenario B: Proliferation of AGI development in the private sector

Implications for society: Limited regulatory oversight allows the private sector to lead in AGI development, potentially accelerating technological progress. However, this scenario may exacerbate societal inequalities in AI access and control.

Implications for business: The AI industry sees sustained demand for advanced computing amid unchecked development. Ethical concerns and public trust issues could weigh on market sentiment and long-term sustainability.

Probability: We estimate a 35% probability of private-sector dominance in AGI bringing uneven benefits and ethical challenges, potentially affecting the AI industry's strategic direction and Big Tech's market positions.

Scenario C: International collaboration and regulation limit innovation cycles

Implications for society: Global collaboration and regulatory frameworks emerge to manage AGI responsibly, balancing innovation with safety and ethical considerations. Progress towards AGI deployment across sectors could be slower but more stable.

Implications for business: The AI industry benefits from standardized global regulations that ensure fair competition and responsible AI deployment. However, stringent regulations may also increase operational costs and limit rapid innovation cycles.

Probability: We estimate a 40% probability of international regulations stifling innovation and fragmenting the global AI landscape, leading to geopolitical tensions and competitive disadvantages for companies operating under stricter regulatory environments.
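The three probabilities above (25%, 35% and 40%) add up to 100%, so they can be read as a complete split of outcomes, even though the scenarios are described as partially overlapping. Below is a minimal Python sketch that checks the weights and derives one number the scenario analysis leaves implicit. Suppose, as an assumption not stated in the text, that big tech only scales back under Scenario A. Then the 20% scale-back figure implies an 80% chance of scaling back given Scenario A. The names `SCENARIOS`, `P_SCALE_BACK`, `check_weights` and `implied_conditional` are hypothetical.

```python
import math

# Scenario probabilities as stated in the article. Treating them as
# mutually exclusive is an assumption made only for this check.
SCENARIOS = {
    "A": 0.25,  # regulatory involvement waters down commercial capacity
    "B": 0.35,  # proliferation of AGI development in the private sector
    "C": 0.40,  # international collaboration and regulation
}

# Stated probability that big tech scales back its most aggressive investments.
P_SCALE_BACK = 0.20


def check_weights(scenarios: dict[str, float]) -> float:
    """Return the total probability mass; raise if it is not (close to) 1."""
    total = sum(scenarios.values())
    if not math.isclose(total, 1.0):
        raise ValueError(f"scenario weights sum to {total}, not 1")
    return total


def implied_conditional(p_joint: float, p_condition: float) -> float:
    """Return P(X | Y) = P(X and Y) / P(Y).

    Assumption (not from the article): scale-back happens only inside
    Scenario A, so P(scale back and A) equals P(scale back).
    """
    return p_joint / p_condition


if __name__ == "__main__":
    print(f"total weight: {check_weights(SCENARIOS):.2f}")
    p = implied_conditional(P_SCALE_BACK, SCENARIOS["A"])
    print(f"implied P(scale back | A): {p:.0%}")
```

Running it prints a total weight of 1.00 and an implied conditional probability of 80%. If some scale-back is also allowed to happen outside Scenario A, the joint probability with A drops below 20%, and so does the conditional.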

You can watch the full-length interview with Leopold Aschenbrenner on the Dwarkesh Podcast here: https://www.dwarkeshpatel.com/p/leopold-aschenbrenner